Background: Prof. Smoke spilled the beans on some top-secret functionality during the interview. The interview was actually published before its deletion.

Mission: Crack this case and unearth the elusive interview link.

For all the marbles: What is the link to the secret interview?

What we know about Scott’s podcast:

- He usually drops a podcast every week.

- His videos rack up thousands of views.

- On the 900th episode, a video was released, then deleted.

Which anomalies in the video logs are we looking for?

- There is a week that is missing a published video.

- There is a week that has very few views.

- There is a video that was live for only a short time.

First, we structure this data:

StorageArchiveLogs

| parse EventText with TransactionType " blob transaction: '" BlobURI "'" *

| parse EventText with "Read blob transaction: '" * "' read access (" Reads:long " reads)" *

| parse EventText with * "' backup is created on " Backup

| extend Details = parse_url(BlobURI)

| extend Host = tostring(Details.Host), Path=tostring(Details.Path)
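To see what the parse steps produce, here is a minimal sketch that runs the same operators on a small inline table. The sample rows, the `example` host, the paths, and the trailing message text are all made up for illustration; only the operators and the text patterns come from the query above.

```kusto
// Hypothetical sample rows: hosts, paths, and trailing text are invented.
datatable(Timestamp:datetime, EventText:string)
[
    datetime(2023-01-01), "Read blob transaction: 'https://example.blob.core.windows.net/videos/ep1.mp4' read access (42 reads) were detected",
    datetime(2023-01-02), "Create blob transaction: 'https://example.blob.core.windows.net/videos/ep2.mp4' backup is created on https://backup.example.net/videos/ep2.mp4",
    datetime(2023-01-03), "Delete blob transaction: 'https://example.blob.core.windows.net/videos/ep2.mp4' was removed"
]
// Every row: transaction type and blob URI.
| parse EventText with TransactionType " blob transaction: '" BlobURI "'" *
// Read rows only: number of reads (null on other rows).
| parse EventText with "Read blob transaction: '" * "' read access (" Reads:long " reads)" *
// Create rows only: backup location (empty on other rows).
| parse EventText with * "' backup is created on " Backup
| extend Details = parse_url(BlobURI)
| extend Host = tostring(Details.Host), Path = tostring(Details.Path)
| project Timestamp, TransactionType, Host, Path, Reads, Backup
```

Rows that do not match a given pattern keep an empty value in the columns that pattern would have filled, which is why three separate `parse` steps can be chained safely.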

We can persist the result as a table with .set-or-replace, or bind it to a variable with let. The later queries refer to it as StorageArchiveLogsEx.
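As a sketch of the first option, the parsed result could be stored with a `.set-or-replace` command. This assumes you have permission to create tables in the database; the table name `StorageArchiveLogsEx` is taken from the later queries.

```kusto
.set-or-replace StorageArchiveLogsEx <|
    StorageArchiveLogs
    | parse EventText with TransactionType " blob transaction: '" BlobURI "'" *
    | parse EventText with "Read blob transaction: '" * "' read access (" Reads:long " reads)" *
    | parse EventText with * "' backup is created on " Backup
    | extend Details = parse_url(BlobURI)
    | extend Host = tostring(Details.Host), Path = tostring(Details.Path)
```

With `let`, the same query body would instead be assigned to a name (`let StorageArchiveLogsEx = StorageArchiveLogs | parse ... ;`) at the top of each query that uses it. That avoids writing to the database but re-runs the parsing every time.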

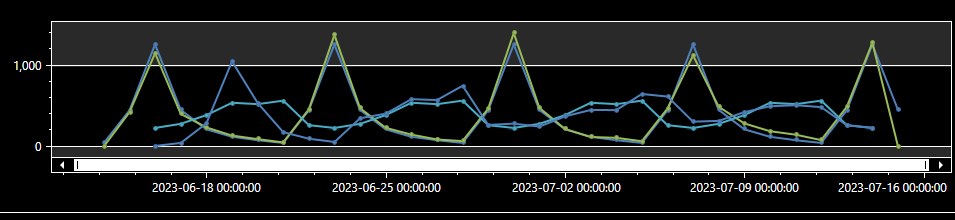

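Let's check the hosts against the first point in our knowledge base: the frequency of podcast uploads.

We use series_periods_validate with a 7-day period. The first query builds a daily series of reads per host (its result is saved as StorageArchiveLogsTs); the second validates the weekly period on that series: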

StorageArchiveLogsEx

| make-series Reads = sumif(Reads, TransactionType == 'Read') on Timestamp step 1d by Host

StorageArchiveLogsTs

| extend (flag, score) = series_periods_validate(Reads, 7.0)

| where array_sort_asc(score)[0] > 0.6

| render timechart

We found one host with a 7-day periodicity: okeexeghsqwmda.blob.core.windows.net

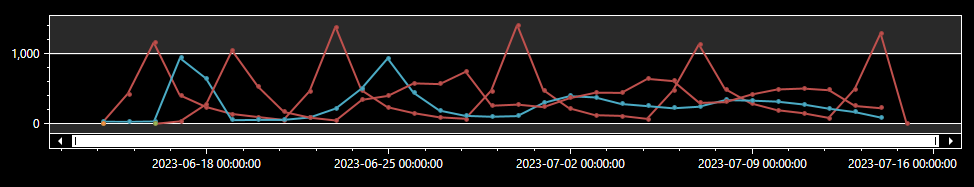
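To build intuition for the periodicity check, here is a minimal sketch on synthetic data. The series is made up: 8 weeks of daily counts with a spike every 7th day. `series_periods_validate` is asked to score two candidate periods, 7.0 and 5.0 (the 5.0 candidate is an arbitrary comparison value).

```kusto
// Synthetic data: 56 daily values with a spike on every 7th day.
range Day from 0 to 55 step 1
| extend Reads = iff(Day % 7 == 0, 1000, 10)
| order by Day asc
| summarize Reads = make_list(Reads)
// Returns two arrays: the candidate periods and a score per candidate.
| extend (periods, scores) = series_periods_validate(Reads, 7.0, 5.0)
```

The score for 7.0 would be expected to be clearly higher than the score for 5.0, which is the same signal the `> 0.6` filter above relies on to pick out hosts with weekly uploads.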

Now we check for anomalies against the second point in our knowledge base: the number of views.

On our one periodic host, we narrow down to two dates that had fewer views than usual.

StorageArchiveLogsTs

| where Host in ((StorageArchiveLogsTs

| extend (flag, score) = series_periods_validate(Reads, 7.0)

| where array_sort_asc(score)[0] > 0.6

| project Host))

| extend (flag, score, baseline) = series_decompose_anomalies(Reads)

| where array_index_of(flag, -1) >= 0

| render timechart
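The anomaly step can be tried in isolation on synthetic data. In this sketch the series is made up: the same weekly spike pattern as a podcast release, but with one release day (day 28) getting far fewer reads. Expanding the result arrays back into rows makes it easy to see which days were flagged as negative anomalies.

```kusto
// Synthetic data: weekly spikes of 1000 reads, except day 28, which gets only 50.
range Day from 0 to 55 step 1
| extend Reads = iff(Day % 7 == 0, iff(Day == 28, 50, 1000), 10)
| order by Day asc
| summarize Day = make_list(Day), Reads = make_list(Reads)
// flag is +1 (positive anomaly), -1 (negative anomaly), or 0 (normal).
| extend (flag, score, baseline) = series_decompose_anomalies(Reads)
| mv-expand Day to typeof(long), Reads to typeof(long), flag to typeof(double), score to typeof(double)
| where flag == -1
```

One would expect day 28 to show up with a strongly negative score. Note that `array_index_of` returns a zero-based position, or -1 when the value is absent, so a filter on its result needs to account for a match at position 0.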

For the final point in our knowledge base: a short time to live.

We correlate the host that had unusually few views on a particular date with a delete event.

StorageArchiveLogsEx

| where TransactionType == 'Delete'

| where Host in ((StorageArchiveLogsTs

| where Host in ((StorageArchiveLogsTs

| extend (flag, score) = series_periods_validate(Reads, 7.0)

| where array_sort_asc(score)[0] > 0.6

| project Host))

| extend (flag, score, baseline) = series_decompose_anomalies(Reads)

| where array_index_of(flag, -1) >= 0 and array_sort_asc(score)[0] < -5

| project Host))

Let’s find the backup URL:

StorageArchiveLogsEx

| where TransactionType == 'Delete'

| where Host in ((StorageArchiveLogsTs

| where Host in ((StorageArchiveLogsTs

| extend (flag, score) = series_periods_validate(Reads, 7.0)

| where array_sort_asc(score)[0] > 0.6

| project Host))

| extend (flag, score, baseline) = series_decompose_anomalies(Reads)

| where array_index_of(flag, -1) >= 0 and array_sort_asc(score)[0] < -5

| project Host))

| join kind=inner(StorageArchiveLogsEx

| where TransactionType == 'Create')

on BlobURI

| project Backup1
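The final projection relies on how `join` names its output columns: when the right side has a column with the same name as one on the left, the right-side copy gets a `1` suffix. With the backup column named `Backup`, as in the parse step at the start, the value from the Create event therefore arrives as `Backup1`. A minimal sketch with made-up URLs:

```kusto
// Hypothetical rows: the URLs are invented for illustration.
let Deleted = datatable(BlobURI:string, Backup:string)
[
    "https://example.invalid/videos/ep2.mp4", ""
];
let Created = datatable(BlobURI:string, Backup:string)
[
    "https://example.invalid/videos/ep2.mp4", "https://backup.example.invalid/videos/ep2.mp4"
];
Deleted
| join kind=inner (Created) on BlobURI
// Backup is the (empty) left-side value; Backup1 is the right-side value.
| project BlobURI, Backup1
```

The Delete row carries no backup location of its own, so the answer has to come from the matching Create row on the right side of the join.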